This is a logical progression. After a year spent making the case for generative artificial intelligence (AI) tools, corrective measures based on how they are being used must follow. The biggest hazard to emerge in this time, as generative AI tools relentlessly kept getting better, is generated images and videos being passed off as real. Deepfakes, if you will. In just the past few days, social media networks including X have been swamped by explicit fakes of celebrities. First, Taylor Swift. Then, Drake. The sordid phenomenon even got its own term: Taylor Swift AI.

The fact that X (formerly Twitter) could only control the spread of Taylor Swift’s fake images by completely blocking any search for the phrase “Taylor Swift” indicates how powerless its own systems were in checking the eventual virality. These scandals aren’t hurting X’s downloads; owner Elon Musk even boasted on February 7 about leading the iOS download charts. No one is quite sure where these generated images of Taylor Swift first emerged. Some point to a Telegram group. Others, to a network called 4chan. What is certain is that a text-to-image generative AI tool was used to create these fakes. The social networks, though this takes no content moderation responsibility away from them, were the means of uncontrolled spread.

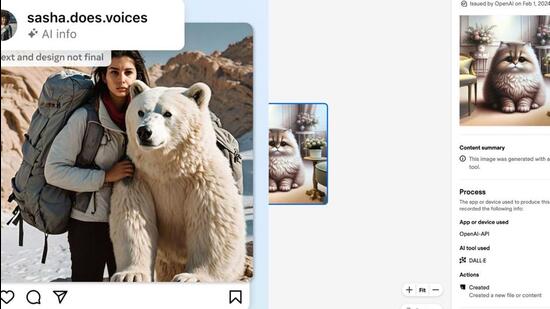

The timing may be coincidental, but social media company Meta and AI company OpenAI now say they’ll begin adding labels or watermarks to images generated using AI. “Since AI-generated content appears across the internet, we’ve been working with other companies in our industry to develop common standards for identifying it through forums like the Partnership on AI (PAI). The invisible markers we use for Meta AI images – IPTC metadata and invisible watermarks – are in line with PAI’s best practices,” says Nick Clegg, President, Global Affairs at Meta.

What Meta is saying is that it will look closely for otherwise invisible signals in images shared by users on Facebook, Instagram, and Threads, including those generated using any of the AI tools available on the internet. For its part, the tech giant already applies watermarks and visible markers to media generated using the Meta AI tool.

“Meta’s initiative to detect and label AI-generated images shared on Facebook, Instagram, and Threads is commendable. This action aids in distinguishing between human and synthetic content, crucial for safeguarding user privacy and combating the proliferation of deepfakes. With recent incidents ranging from financial scams to celebrity exploitation, this measure is timely and essential,” Nilesh Tribhuvann, Founder and Managing Director, White & Brief Advocates & Solicitors, tells HT.

It won’t be easy, though, since there are multiple junctions to navigate on this path. Industry standards for the visible and invisible markers that accompany each generative AI creation will need to be deployed by all AI platforms, including those from Google, OpenAI, Microsoft, Adobe and Midjourney, to name a few. It is for generative AI tools to integrate these measures, which would allow Meta (and, hopefully, more social networks that join in) to use these signals to place the necessary labels on posts featuring AI content.

Content credentials, and the standards

Crucial here is the “AI generated” information carried by the digital signature standard defined by the Coalition for Content Provenance and Authenticity (C2PA), as well as by the widely used IPTC Photo Metadata Standard from the International Press Telecommunications Council.
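To make the idea of an invisible marker concrete, here is a minimal Python sketch of how a platform might look for the IPTC signal in an uploaded file. It assumes the image carries an uncompressed XMP packet containing the IPTC Digital Source Type value for AI-generated media; the function names `looks_ai_labelled` and `label_for` are hypothetical, and this is not how Meta or any other platform has said it implements detection. A real system would parse the metadata properly and verify C2PA signatures rather than scan raw bytes.

```python
# IPTC NewsCodes "Digital Source Type" value used for media created by a
# trained algorithm (generative AI). The URI comes from the IPTC vocabulary;
# the helper functions below are illustrative assumptions.
TRAINED_ALGORITHMIC_MEDIA = (
    b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)


def looks_ai_labelled(image_bytes: bytes) -> bool:
    """Return True if the raw file bytes contain the IPTC AI-generated marker.

    This only works when the XMP metadata is stored as plain text, and it
    says nothing about files whose metadata has been stripped.
    """
    return TRAINED_ALGORITHMIC_MEDIA in image_bytes


def label_for(image_bytes: bytes) -> str:
    """Map the detection result to a label a feed could show on a post."""
    if looks_ai_labelled(image_bytes):
        return "AI generated"
    return "no AI marker found"


# Toy input: a fake file body with an XMP-style fragment embedded in it.
sample = (
    b"\xff\xd8...<Iptc4xmpExt:DigitalSourceType>"
    + TRAINED_ALGORITHMIC_MEDIA
    + b"</Iptc4xmpExt:DigitalSourceType>..."
)
print(label_for(sample))
print(label_for(b"\xff\xd8 ordinary photo bytes"))
```

The second call shows the weakness of this kind of check: a missing marker proves nothing, since metadata is easily stripped when an image is re-saved or screenshotted.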

Google is also joining the Coalition for Content Provenance and Authenticity (C2PA) to support the push for “content credentials” on generated content. “It builds on our work in this space – including Google DeepMind’s SynthID, Search’s About this Image and YouTube’s labels denoting content that is altered or synthetic – to provide important context to people, helping them make more informed decisions,” points out Laurie Richardson, VP of Trust and Safety at Google.

Tech and photography companies including Adobe, Microsoft, Intel, Leica, Nikon, Sony and Amazon Web Services are part of the C2PA.

“In the critical context of this year’s global elections where the threat of misinformation looms larger than ever, the urgency to increase trust in the digital ecosystem has never been more pressing. We are thrilled to welcome Google as the newest steering committee member of the C2PA, marking a pivotal milestone in our collective effort to combat misinformation at scale,” says Dana Rao, General Counsel and Chief Trust Officer, Adobe and Co-founder of the C2PA.

“Google’s membership will help accelerate adoption of Content Credentials everywhere, from content creation to consumption,” he adds. The benefit will be multi-pronged: Google brings deep research investments alongside access to countless user interactions across its suite of apps with AI integrations. The most recent to join this party is the Gemini chatbot, now available with deeper integration on Android phones and the Apple iPhone, as well as in Gmail and Google Docs. That gives users more convenient ways to access Gemini, which should further increase usage.

OpenAI also says it will begin adding watermarks to content created using its image generator, DALL·E 3, whether directly in the tool or through the popular ChatGPT chatbot. These will follow the standard from the Coalition for Content Provenance and Authenticity (C2PA).

C2PA is an open technical standard that allows publishers, companies, and others to embed metadata in media for verifying its origin and related information. C2PA isn’t just for AI generated images – the same standard is also being adopted by camera manufacturers, news organizations, and others to certify the source and history (or provenance) of media content.

“Images generated with ChatGPT on the web and our API serving the DALL·E 3 model, will now include C2PA metadata. This change will also roll out to all mobile users by February 12th. People can use sites like Content Credentials Verify to check if an image was generated by the underlying DALL·E 3 model through OpenAI’s tools,” says an OpenAI statement, setting out the timelines and methodology. The company says adding a watermark or identification label of some sort does not affect the time needed to generate an AI image, and makes at most a negligible difference to the file size.

Adobe’s idea: before its time, or perfect timing?

Adobe had the right idea much earlier. In July last year, the tech giant talked about transparency for digital content as it pushed the Adobe Firefly generative AI platform, both standalone and integrated within its popular creative apps including Photoshop and Lightroom. It called them ‘nutrition labels’ at the time. Now, content credentials are also part of anything generated using Adobe’s apps on the Apple Vision Pro platform.

“As generative AI becomes more prevalent in everyday life, consumers deserve to know whether content was generated or edited by AI. Firefly content is trained on a unique dataset and automatically tagged with Content Credentials, bringing critical trust and transparency to digital content. Content Credentials are a free, open-source technology that serve as a digital “nutrition label” and can show information such as name, date and the tools used to create an image, as well as any edits made to that image,” Adobe had said, at the time. They’ve persisted since.

“In this era of widespread misinformation, it’s important to have a transparent approach to ensure that consumers have the information necessary to make informed decisions about the trustworthiness of digital content, as well as brands and creators to establish trust in their content,” says Matt Arcaro, Research Director, Computer Vision and AI at IDC Research.

It may come as a surprise, but camera makers are more than willing to embed details about an image. In October last year, Leica introduced the world’s first camera with Content Credentials integrated. That was the new Leica M11-P, which adds a layer of authenticity at the point of capture itself. Sony has also said it will incorporate Content Credentials into its Alpha 9 III line of cameras, as well as the Alpha 1 and Alpha 7S III models, through firmware updates. Nikon is working on similar functionality for its next line of cameras, as well as updates for compatible existing ones.

Yet, are labels and watermarks a definitive solution? Unlikely. Meta knows it too. They say it’s imperative to continue working to develop classifiers that can help to automatically detect AI-generated content, even if that specific content lacks invisible markers from the outset. They’re also looking for ways to make it more difficult to remove or alter invisible watermarks, which creators would inevitably attempt to do.

“This work is especially important as this is likely to become an increasingly adversarial space in the years ahead. People and organizations that actively want to deceive people with AI-generated content will look for ways around safeguards that are put in place to detect it,” says Clegg.

White & Brief Advocates & Solicitors’ Tribhuvann believes regulation is important. “While Meta’s efforts are a positive step, governmental oversight remains imperative. Robust legislation and enforcement are necessary to ensure that all social media platforms adhere to stringent regulations. This proactive approach not only strengthens user protection but also fosters accountability across the tech industry,” he says.

The crucial question will be whether the embedded metadata and identifiers in generated images can be removed, easily or with some persistence. One approach is to go to the hardware level, where possible. Qualcomm’s latest chip for smartphones, the Snapdragon 8 Gen 3 mobile platform, supports Content Credentials in camera systems, based on the global C2PA standard format. But that is easier said than done.